AI Workflow Automation Security Best Practices

Key takeaways

- AI workflow automation introduces new risks that require tailored security governance, compliance, and access control.

- Best practices include data encryption, identity and access management (IAM), and secure API integrations.

- A layered security-by-design approach is crucial during the development and deployment of AI workflows.

- Auditing, monitoring, and anomaly detection tools help mitigate risks and ensure continuous compliance.

AI workflow automation is transforming the way businesses operate, accelerating decision-making, reducing manual overhead, and enhancing productivity. From financial forecasting to HR operations and customer service, AI-driven workflows are now central to modern digital ecosystems.

However, with this power comes significant responsibility—security. AI workflows are often built on sensitive data, run through integrated systems, and make decisions autonomously. This creates a larger attack surface and new categories of vulnerabilities—data leakage, model manipulation, shadow AI, and integration flaws.

According to IBM’s 2023 Cost of a Data Breach report, the average breach cost is $4.45 million, and misconfigurations in automation workflows are a growing contributor to this figure.

To protect organisational assets, customers, and intellectual property, organisations must adopt best practices tailored specifically to AI workflow automation security.

Why AI Workflow Automation Security Is Different

AI-driven automation introduces a level of complexity and unpredictability not typically seen in traditional rule-based systems. While conventional workflows execute predefined sequences, AI workflows adapt, learn, and make decisions, which makes securing them fundamentally more challenging. Here’s why:

1. Autonomy

Unlike traditional automation that follows fixed logic, AI models operate on probabilistic reasoning. They process real-time inputs and apply learned patterns to make decisions—sometimes with no human oversight. This autonomy introduces risk: if the AI is trained on biased, poisoned, or incomplete data, its decisions can be flawed or even dangerous. For example, an autonomous finance workflow might incorrectly flag legitimate transactions as fraudulent based on misinterpreted patterns.

2. Integration Depth

AI workflows often connect to multiple systems—ERP tools, CRMs, cloud platforms, databases, and third-party APIs—to gather data or trigger actions. This deep integration creates a sprawling digital surface. If just one of these systems has weak authentication or outdated encryption protocols, it becomes a potential breach point that exposes the entire workflow.

3. Data Sensitivity

AI workflows thrive on data—often large volumes of personally identifiable information (PII), financial records, intellectual property, and business-sensitive insights. This makes them prime targets for cybercriminals. For instance, an AI system automating patient intake in a hospital must handle protected health information (PHI), putting it under strict regulatory scrutiny like HIPAA.

4. Opaque Behaviour

Many AI models—especially those using deep learning—are black boxes. Even when trained effectively, it’s difficult to explain why a certain decision was made. This lack of transparency becomes problematic in regulated industries like banking, healthcare, or insurance, where organisations must demonstrate fairness, logic, and traceability in automated decisions.

Top AI Workflow Automation Security Risks

Nearly one in three organisations struggle with shadow data—untracked or ungoverned information that creates serious blind spots in AI workflows.

AI workflows unlock speed and scalability but introduce unique security risks that organisations must anticipate and neutralise. Here are the most critical ones:

1. Data Exposure

AI systems constantly ingest and process data, and if input streams, training datasets, or operational logs aren’t properly secured, they can become entry points for data leaks. Whether it’s an exposed S3 bucket or improperly masked data, breaches are common when guardrails are weak.

Example: A customer support AI that logs user queries (including names or account numbers) without redaction could inadvertently expose private data in logs or analytics dashboards.

Security concern: Without encryption, access controls, and secure storage policies, these data flows become liabilities.

2. Model Tampering

Attackers may attempt to corrupt the underlying AI logic by injecting malicious inputs (evasion attacks) or altering training data (data poisoning). These techniques allow adversaries to bias or degrade the AI’s decision-making accuracy.

Example: An attacker manipulates the input of a fraud detection model so it no longer flags suspicious activity, giving them time to execute malicious transactions.

Security concern: Model tampering can go undetected unless monitoring and validation mechanisms are in place.

3. Insecure Integrations

Workflows depend on interconnected services—and each connection must be secured. Weaknesses in API authentication, outdated libraries, or lack of TLS can expose sensitive operations.

Example: An AI-powered procurement workflow integrated with a legacy ERP system might transmit purchase orders over unsecured channels, exposing them to interception.

Security concern: Attackers can exploit these connections to inject malicious commands, exfiltrate data, or hijack the automation.

4. Overprivileged Workflows

Many AI bots or services run with excessive permissions, often due to default configurations or lack of role segmentation. If compromised, these workflows can access entire databases, modify records, or shut down critical operations.

Example: A bot automating HR tasks also has write access to payroll systems—a breach in one area exposes all employee data.

Security concern: Overprivileged bots expand the attack surface, making it vital to enforce the principle of least privilege.

5. Shadow AI and Unvetted Models

Employees may use unauthorised AI tools or models—downloaded from GitHub or AI marketplaces—without IT’s knowledge. These shadow AI deployments often lack security vetting, making them potential sources of malware, backdoors, or compliance risks.

Example: A marketing team uses an open-source AI tool for lead scoring, unaware that it sends data back to an external server.

Security concern: Lack of visibility into these tools introduces blind spots in governance, increasing the likelihood of breaches and regulatory violations.

End-to-end workflow automation

Build fully-customizable, no code process workflows in a jiffy.

AI Workflow Automation Security Best Practices

Now let’s explore the most effective, field-tested practices for securing AI-powered workflows.

- Secure Data Handling at Every Stage: AI workflows handle vast volumes of sensitive, often regulated data—from financial records to healthcare diagnostics. Securing this data at each touchpoint is vital.

- Data Encryption: Adopt AES-256 for data at rest to prevent unauthorised access in storage, and enforce TLS 1.3 for encrypted transmission over networks. This is especially critical in multi-cloud or hybrid environments where data hops between services.

- Tokenisation & Masking: Use tokenisation for personal identifiers (e.g., replacing names with tokens in logs), and masking techniques in UIs to avoid exposing full data to users unnecessarily.

- Data Minimisation: Only collect and retain what’s essential for the workflow’s purpose. For instance, strip metadata or truncate logs to eliminate excess historical data that poses security risks if breached.

- Zero Trust Architecture: Implement authentication and authorisation at every interface—whether between microservices or user access points. Trust must be earned, not assumed.

2. Robust Identity and Access Management (IAM)

Strong IAM frameworks ensure that only the right people—and bots—can interact with AI workflows.

- Role-Based Access Control (RBAC): Assign granular roles (e.g., workflow designer vs. execution monitor) and permissions aligned with job duties, using tools like Azure AD or Okta.

- Least Privilege Access: Design access policies so that users can perform only what is necessary. For example, restrict bot access to only specific workflow endpoints.

- Multi-Factor Authentication (MFA): MFA drastically reduces the risk of credential compromise. Mandate it for admin dashboards, workflow approval interfaces, and APIs.

- Service Account Segmentation: Use different accounts for each workflow or module, minimising the risk if one credential is compromised.

3. Secure AI Model Lifecycle

AI models are the engine behind automated decisions. Compromised models lead to faulty, biased, or malicious outcomes.

- Secure Model Training: Use curated, verified datasets and isolate training environments from production systems to prevent data leakage or contamination.

- Model Signing: Digitally sign models post-training and verify their signatures before deployment. This prevents unauthorised changes to AI logic.

- Input Validation: Sanitise real-time inputs to avoid adversarial examples—data crafted to mislead or crash the model.

- Drift Monitoring: Regularly compare live model predictions with expected outcomes. Tools like Amazon SageMaker Model Monitor can detect anomalies and prompt retraining.

4. API Security and Integration Governance

APIs are the backbone of AI workflow integration—but they are also attack vectors.

- OAuth 2.0 Authentication: Replace static API keys with OAuth-based tokens that expire and require secure refresh processes.

- Rate Limiting and Throttling: Prevent brute-force and DoS attacks by limiting how many requests users and systems can send per minute.

- Input/Output Validation: Apply schema checks and sanitation at both entry and exit points of APIs to prevent injection attacks and data leaks.

- API Gateway Logging: Use gateways like Kong, Apigee, or AWS API Gateway to centralise traffic logging and enforce policy-based access control.

5. Continuous Monitoring and Anomaly Detection

Threats evolve rapidly. Real-time monitoring enables prompt detection and mitigation.

- SIEM Integration: Feed logs from workflow engines into systems like Splunk, QRadar, or Azure Sentinel to correlate events and detect threats.

- Behavioural Analytics: Train models on normal workflow usage patterns. Flag deviations—such as high-frequency execution or access from unknown IPs—for review.

- Audit Trails: Maintain tamper-proof logs to trace decisions and actions. This is critical for forensic investigations or regulatory audits.

- Automated Alerts: Configure alerting systems to notify admins when risky actions occur, such as unscheduled model retraining or unusual data exports.

6. Secure DevOps for Workflow Builders

Many AI workflows are developed using low-code/no-code platforms. These environments must follow secure engineering practices.

- Environment Isolation: Never build directly in production. Separate environments reduce risk of accidental changes or exposure during development.

- Version Control: Use Git repositories for workflow code, scripts, and ML models. Enable signed commits to verify authorship and integrity.

- Code Reviews & Testing: Even no-code environments allow scripting or custom logic. Scan all custom code using static analysis tools and conduct peer reviews.

- Security Awareness Training: Empower workflow builders with knowledge about secure coding, data protection, and platform vulnerabilities. Even citizen developers need cyber hygiene.

7. Governance, Compliance, and Regulatory Alignment

AI workflows touch regulated data and perform actions that affect compliance posture.

- GDPR/CCPA Compliance: Workflows should log user consent, support “right to forget,” and limit access to personally identifiable information (PII) based on region and purpose.

- HIPAA Compliance: For healthcare workflows, log every access and edit to patient data, and use encrypted transmission across devices and storage systems.

- SOC 2 Type II Controls: Implement structured controls for system availability, confidentiality, and processing integrity. Document proof of adherence for auditors.

- AI Model Audits: Regularly evaluate model behaviour for bias, explainability, and accuracy. Especially important in regulated sectors like finance or healthcare.

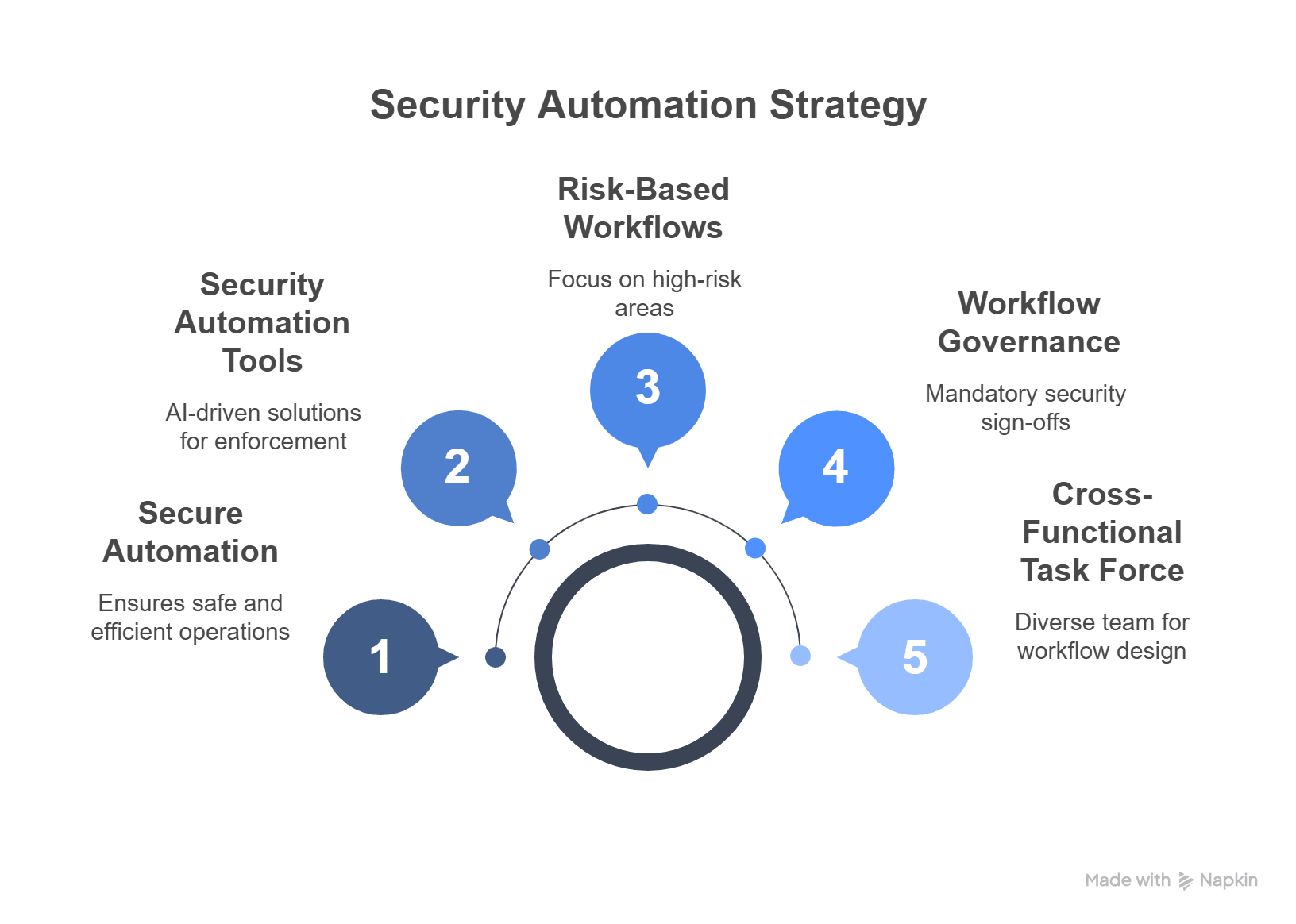

How Business Leaders Can Operationalise These Practices

According to McKinsey, 31% of companies have already fully automated at least one core function—making secure deployment a business imperative. Security must be embedded as a culture and governance layer—not just a checklist.

- Create a Cross-Functional Task Force: Build a permanent team involving IT, data science, security, operations, and legal. This ensures diverse perspectives during workflow design and rollout.

- Embed Security in Workflow Governance: Make security sign-off part of the workflow approval pipeline. Treat security reviews like code reviews—mandatory and documented.

- Prioritise Risk-Based Workflows: Not all workflows are equal. Focus resources and scrutiny on high-risk areas like financial approvals, customer identity verification, or autonomous decisions.

- Invest in Security Automation: Use AI-driven tools for policy enforcement, threat detection, and compliance checks. For example, implement AI to flag misconfigured access policies or unencrypted data paths.

Real-World Security Incidents: Lessons from Major Breaches

Automation and AI can expose critical vulnerabilities when security is neglected. These real-world incidents reveal the impact of misconfigurations, weak controls, and unchecked AI behaviour.

1. EasyJet Data Breach

Between October 2019 and March 2020, EasyJet fell victim to a sophisticated cyberattack that compromised the personal data of approximately nine million customers. The attackers gained unauthorised access to sensitive information, including names, email addresses, travel details, and in some cases, credit card information. The breach was linked to weaknesses in EasyJet’s IT infrastructure and exposed gaps in their cybersecurity posture.

This incident illustrates the critical importance of securing customer data across automated airline booking and customer management workflows. It also highlights the need for regular system vulnerability assessments, particularly in industries that process high volumes of personal and financial data.

2. Sage Copilot AI Misbehaviour

In January 2025, Sage Group—a major UK-based software provider—temporarily suspended its AI assistant, Sage Copilot, after a glitch caused it to expose irrelevant and potentially sensitive business data to the wrong users. Although described as a “minor” fault, the AI assistant’s misbehaviour raised serious concerns around data governance in AI-enabled platforms. The issue was traced back to a flaw in data handling and access controls within the AI’s logic.

This event underscores the risks involved in deploying AI systems without rigorous validation and monitoring. It serves as a reminder that businesses must implement strong data partitioning and access rules before integrating AI into workflow automation.

- Electoral Commission Cyberattack

The UK Electoral Commission disclosed in 2023 that it had been the target of a cyberattack that went undetected for over a year—from August 2021 to October 2022. The breach affected the personal data of approximately 40 million registered voters, making it one of the most significant data exposures in the UK’s public sector. Investigations revealed that outdated systems and weak password protocols were contributing factors.

Although there was no confirmed misuse of the data, the incident raised alarms over the security of critical infrastructure and the vulnerability of electoral systems to digital threats. It reinforces the need for regular security audits, software updates, and strict identity management in government-run workflows.

Future-Proofing Your Security Strategy

AI workflow automation is advancing rapidly, and staying ahead of emerging threats requires a forward-looking security approach. The following innovations are shaping the next wave of secure AI deployment.

Explainable AI (XAI):

As AI becomes more embedded in decision-making workflows, the ability to explain and justify its outcomes is critical. Explainable AI focuses on making algorithmic decisions transparent, traceable, and interpretable by humans. This is essential for maintaining trust, auditing automated actions, and ensuring compliance with regulations like GDPR and the UK’s AI governance frameworks.

Federated Learning:

To train machine learning models without centralising sensitive data, federated learning enables decentralised model training across multiple devices or data sources. This technique reduces the risk of data breaches during training and ensures data remains local—ideal for regulated industries like healthcare and finance where data privacy is paramount.

AI Red Teaming:

Inspired by cybersecurity practices, AI red teaming involves intentionally probing AI systems to uncover vulnerabilities. This includes simulating adversarial attacks, testing model robustness, and identifying security gaps in automated workflows. Red teaming ensures that AI systems can withstand real-world exploitation attempts before they’re deployed at scale.

Secure Prompt Engineering:

As prompt-based AI agents (e.g., GPT-powered bots) become part of business workflows, a new risk emerges: prompt injection. This attack vector manipulates input prompts to make the AI act unpredictably or leak data. Secure prompt engineering focuses on hardening these prompts, validating user inputs, and implementing contextual safeguards to prevent misuse of generative models in workflow environments.

Conclusion

From data breaches to model tampering, organisations must proactively embed security into every stage of the AI lifecycle. A layered, policy-driven approach ensures resilience, transparency, and compliance in today’s evolving threat environment.

Cflow offers a secure, no-code platform to design, deploy, and manage AI-enabled workflows with built-in access controls, data encryption, and audit trails. With Cflow, you can automate confidently—without compromising on security or compliance.

Start your free trial today and build workflows that are not just smart, but secure.

FAQs

1. What are the 4 stages of an AI workflow?

AI workflows typically involve data ingestion, model training, model deployment, and monitoring. Each stage has specific security needs. A secure lifecycle ensures robust, compliant AI performance.

2. What is a security workflow?

A security workflow automates tasks like threat detection, incident response, and access reviews. It helps enforce consistent, auditable security controls across systems.

3. What is the correct automation workflow?There’s no one-size-fits-all. A correct AI automation workflow includes secure data handling, validated AI models, controlled integrations, and real-time monitoring.

4. What is AI security system?

An AI security system uses machine learning to detect threats, manage risks, and automate security tasks. It enhances response speed and predictive threat mitigation.

5. How does automation increase security?

Automation reduces human error, enforces consistent policies, and responds to threats faster. It enables scalable, rule-based security enforcement with real-time alerts.

What should you do next?

Thanks for reading till the end. Here are 3 ways we can help you automate your business:

Do better workflow automation with Cflow

Create workflows with multiple steps, parallel reviewals. auto approvals, public forms, etc. to save time and cost.

Talk to a workflow expert

Get a 30-min. free consultation with our Workflow expert to optimize your daily tasks.

Get smarter with our workflow resources

Explore our workflow automation blogs, ebooks, and other resources to master workflow automation.